26 Nov 2009

I’m writing a skinning sample for a future Android SDK. This has prompted me to construct a toolchain to get skinned animated models into Android applications.

I’m really only just getting started, but so far I’m thinking along these lines:

Wings 3D -> Blender 2.5 -> FBX ASCII -> ad-hoc tool -> ad-hoc binary file -> ad-hoc loader.
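The post doesn’t describe the ad-hoc binary file or its loader, so the following is only a sketch of what such a pair might look like. The format (a header with a hypothetical `SKN1` tag plus vertex and bone counts, followed by packed vertices with up to four bone indices and weights) and every name in it (`SkinHeader`, `SkinnedVertex`, `LoadSkinnedMesh`) are assumptions for illustration, not the actual format. It also assumes the export tool and the loader share the same byte order and struct layout.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical file header for the ad-hoc format (an assumption, not a real spec).
struct SkinHeader {
    uint32_t magic;        // hypothetical tag "SKN1"
    uint32_t vertexCount;
    uint32_t boneCount;
};

// One skinned vertex: position plus up to four bone influences.
struct SkinnedVertex {
    float position[3];
    uint8_t boneIndex[4];  // indices into the (not shown) bone array
    float boneWeight[4];   // expected to sum to 1
};

const uint32_t kSkinMagic = 0x314E4B53;  // bytes 'S','K','N','1' read as a little-endian uint32

// Parses a buffer written by the (hypothetical) export tool by copying the raw
// structs back out. Returns false on a wrong magic number or a truncated buffer.
bool LoadSkinnedMesh(const std::vector<uint8_t>& file, uint32_t& boneCount,
                     std::vector<SkinnedVertex>& vertices) {
    SkinHeader header;
    if (file.size() < sizeof(header)) return false;
    std::memcpy(&header, file.data(), sizeof(header));
    if (header.magic != kSkinMagic) return false;
    size_t payload = size_t(header.vertexCount) * sizeof(SkinnedVertex);
    if (file.size() - sizeof(header) < payload) return false;
    vertices.resize(header.vertexCount);
    if (payload > 0) {
        std::memcpy(vertices.data(), file.data() + sizeof(header), payload);
    }
    boneCount = header.boneCount;
    return true;
}
```

Copying raw structs keeps the loader trivial, which is the main appeal of an ad-hoc format; the price is that any change to the layout means re-exporting every model.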

For what it’s worth, right now Blender 2.5 doesn’t support FBX export, so I have to go Collada -> FBX Converter -> FBX. But FBX export support for Blender 2.5 should be ready fairly soon.

You may wonder why I’m considering the proprietary and undocumented FBX format rather than the open Collada standard. I’m doing it for the same reason so many other toolchains (e.g. Unity, XNA) do: FBX is a simpler format that just seems to work more reliably when exchanging data between DCC applications.

26 Nov 2009

The open-source Blender 3D DCC tool has long suffered from an ugly, non-standard, hard-to-learn UI. I’m very happy to see that the latest release, which just entered alpha status, has a much improved UI. It’s not quite Modo quality, but it’s starting to get into the same league as commercial DCC tools.

25 Nov 2009

Yahoo continues to be a source of excellent information on recent and future versions of JavaScript / ECMAScript. Here are two very good talks from November 2009 about the evolution of JavaScript from 2000 until now:

- Doug Crockford on ECMAScript 4/5

- Brendan Eich on ECMAScript 4/5 and Harmony

25 Nov 2009

In the late 1980s Jordan Mechner single-handedly designed and programmed the original Apple II version of Prince of Persia and several other groundbreaking games. He published his development journals and technical design documents on his web site.

The journals touch on:

- The development of the stop-motion animation techniques used so effectively in PoP

- The tension in Jordan’s life between his two callings: game development and feature film scriptwriting / directing.

- The difficulties involved in developing and selling a new game idea.

- A view into the late-’80s / early-’90s pre-web game development business.

Although many games are now written by large teams of people, in the end a lot of the business, artistic, and technical issues Jordan writes about remain relevant. I highly recommend these journals for anyone interested in an inside view of game development. (Or anyone interested in trying to break into Hollywood. :-) )

11 Nov 2009

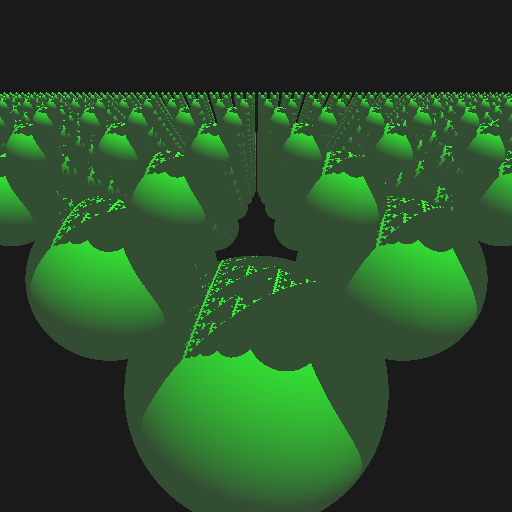

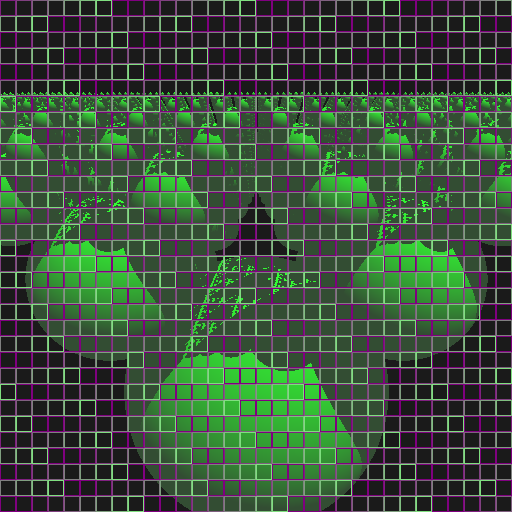

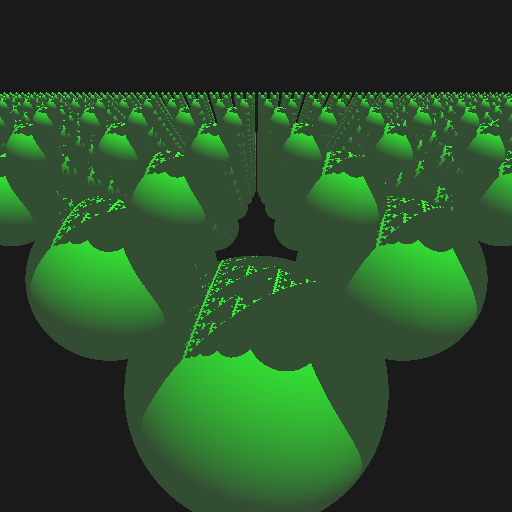

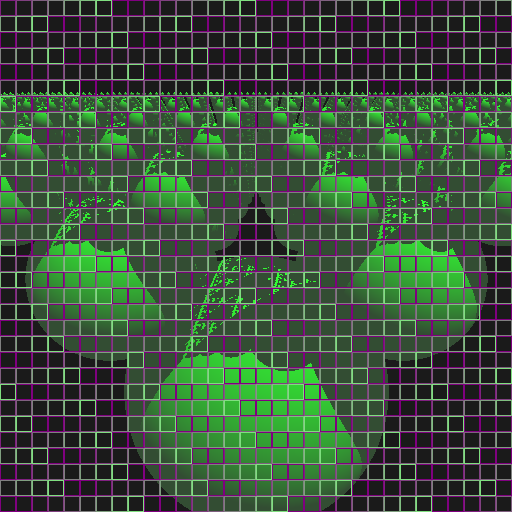

I wrote a simple multi-threaded ray tracer in Google’s new “go” language. It’s an adaptation of Flying Frog Consultancy’s Raytracer.

The images above show the output of the raytracer, along with a diagnostic mode showing which goroutines raytraced which section of the screen. Each goroutine has its own color to outline the pixels it traces.
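The gotracer source isn’t reproduced here, so this is a hedged sketch of one plausible way to split the work, written in C++ with `std::thread` standing in for goroutines. The screen is cut into horizontal bands, one per worker, and each pixel records which worker traced it, which is the information the diagnostic coloring needs. `TracePixel` and `RenderParallel` are hypothetical names, and the band layout is an assumption; the actual program may divide the screen differently.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// Stand-in for the real per-pixel ray trace (hypothetical; a real tracer would
// intersect a ray with the scene here and return a color).
static float TracePixel(int x, int y, int width, int height) {
    return float(x + y) / float(width + height);
}

// Renders with `workers` threads. Worker w handles rows [y0, y1); `owner`
// records which worker traced each pixel (for the diagnostic mode).
void RenderParallel(int width, int height, int workers,
                    std::vector<float>& image, std::vector<int>& owner) {
    image.assign(size_t(width) * height, 0.0f);
    owner.assign(size_t(width) * height, -1);
    std::vector<std::thread> threads;
    for (int w = 0; w < workers; ++w) {
        int y0 = height * w / workers;        // first row of this band
        int y1 = height * (w + 1) / workers;  // one past the last row
        threads.emplace_back([=, &image, &owner] {
            for (int y = y0; y < y1; ++y) {
                for (int x = 0; x < width; ++x) {
                    size_t i = size_t(y) * width + x;
                    image[i] = TracePixel(x, y, width, height);
                    owner[i] = w;  // bands are disjoint, so no locking is needed
                }
            }
        });
    }
    for (std::thread& t : threads) t.join();  // the only synchronization point
}
```

Because each worker writes only its own rows, joining the threads is all the coordination required; in Go the same shape would typically wait on a channel or a `sync.WaitGroup` instead of calling `join`.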

Single-threaded, it runs at about half the speed of a comparable C++ version. I suspect the C++ version benefits from a more aggressively optimizing compiler and from the ability to inline small functions.

Compared to ordinary C/C++, the Go version was easier to multithread.

On my dual-core MacBook Pro I get about a 1.85x speedup when running with GOMAXPROCS > 1:

$ GOMAXPROCS=1 time ./gotrace

**1.52** real 1.50 user 0.01 sys

$ GOMAXPROCS=2 time ./gotrace

**0.82** real 1.50 user 0.01 sys

$ GOMAXPROCS=3 time ./gotrace

**0.81** real 1.50 user 0.01 sys

On an eight-core, 16-hyperthread HP Z600 running Ubuntu 9.10 (with the source code changed to use 16 goroutines instead of the default 8), I get a 5.8x speedup:

$ GOMAXPROCS=1 time ./gotrace

1.05user 0.01system 0:01.06elapsed 99%CPU (0avgtext+0avgdata 0maxresident)k

0inputs+1544outputs (0major+2128minor)pagefaults 0swaps

$ GOMAXPROCS=16 time ./gotrace

1.32user 0.00system 0:00.18elapsed 702%CPU (0avgtext+0avgdata 0maxresident)k

0inputs+1544outputs (0major+2190minor)pagefaults 0swaps
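Working the speedups out from the elapsed (wall-clock) times in the two transcripts, with $T_1$ the single-threaded time and $T_p$ the time when more threads are allowed:

$$
S_p = \frac{T_1}{T_p}, \qquad
S_2^{\mathrm{MacBook}} = \frac{1.52\ \mathrm{s}}{0.82\ \mathrm{s}} \approx 1.85, \qquad
S_{16}^{\mathrm{Z600}} = \frac{1.06\ \mathrm{s}}{0.18\ \mathrm{s}} \approx 5.89
$$

On the Z600, total user time also rose from 1.05 s to 1.32 s (about 26% more CPU work), and the 702% CPU figure means roughly seven hardware threads were busy on average, which helps explain why the speedup stays well below the thread count.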

Source code: gotracer.zip

07 Nov 2009

I’ve been working part time on the “Droid” project for the last year, and it finally shipped today. I wrote some of the graphics libraries.

At first I was skeptical of the industrial design. The early prototypes looked very much like a 1970s American car dashboard. But Motorola really improved the industrial design in subsequent versions. I like the high-grip rubber on the battery cover. It makes the phone very comfortable to hold.

The phone hardware is very fast, and the high-resolution screen is beautiful. I think people will enjoy owning and using this phone.

My favorite feature is the turn-by-turn navigation software. My second favorite feature is the “Droooid” default notification sound. Oh, and the camera flashlight is very nice too!

07 Nov 2009

Man, the web really does change everything!

The company “Demand Media” has developed a Google-like business model for creating how-to videos:

1. Use data mining to figure out what people are searching for.

2. Use a semantic database to figure out what their search queries mean. (e.g. “how to draw a gun” vs. “how to draw a flower”.)

3. Find out how much advertisers are willing to pay for an ad that appears next to the video.

4. Look at how much content already exists to answer the question.

5. Use steps 1-4 to calculate the expected lifetime value of the how-to video.

6. Automate the process of matching freelance writers, videographers, editors, and fact checkers to create the video as inexpensively as possible. (In the $30-per-video range.)

7. Host the resulting videos on YouTube (and other video sites) and monetize them with ad keywords.

They say that the algorithm picks videos with lifetime values about 5 times higher than the ones human editors pick.

These guys have basically automated the “How to” video market. Amazing! I wonder whether they will move up the video food chain into music videos, TV shows, or movies. It might work for low-budget children’s programming.

Or even for casual games. Hmm.

20 Oct 2009

I was pleasantly surprised to see that the latest version of Ubuntu, 9.10 b1, comes with a nice selection of desktop backgrounds. This makes a huge difference in how beginners perceive the product, at very little cost to Ubuntu. The new dark-brown default color scheme is also an improvement over the older schemes.

19 Oct 2009

Now that the Android Native Development Kit has been released, I was finally able to publish the sources and binary for my Android Terminal Emulator.

It’s a fairly plain Digital Equipment Corporation VT-100 terminal emulator, with some features from newer terminals (like colors).
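Color support in newer terminals usually means the ANSI “Select Graphic Rendition” sequence `ESC [ n ; ... m`, where parameters 30 to 37 pick a foreground color and 0 resets. Below is a minimal C++ sketch of parsing it with a small state machine. It is not code from the Android Terminal Emulator (which the post doesn’t show); `SgrParser`, `TextStyle`, and the default color choice are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Current text attributes; only the foreground color is modeled here.
struct TextStyle {
    int foreground = 7;  // ANSI color index 0-7; 7 (white) is the assumed default
};

// Feeds bytes one at a time. Plain bytes are appended to `out`; SGR sequences
// (ESC [ params m) update `style`. Other escape sequences are dropped.
class SgrParser {
public:
    void Feed(char c, TextStyle& style, std::string& out) {
        switch (state_) {
        case State::Ground:
            if (c == '\x1b') state_ = State::Escape;
            else out += c;
            break;
        case State::Escape:
            if (c == '[') {
                state_ = State::Csi;
                params_.assign(1, 0);  // an empty parameter list means "0"
            } else {
                state_ = State::Ground;  // unsupported escape: ignore it
            }
            break;
        case State::Csi:
            if (c >= '0' && c <= '9') {
                params_.back() = params_.back() * 10 + (c - '0');
            } else if (c == ';') {
                params_.push_back(0);
            } else {
                if (c == 'm') Apply(style);  // other final bytes are ignored here
                state_ = State::Ground;
            }
            break;
        }
    }

private:
    enum class State { Ground, Escape, Csi };

    void Apply(TextStyle& style) const {
        for (int p : params_) {
            if (p == 0) style.foreground = 7;                           // reset
            else if (p >= 30 && p <= 37) style.foreground = p - 30;    // set foreground
        }
    }

    State state_ = State::Ground;
    std::vector<int> params_;
};
```

A full VT-100 emulator handles many more final bytes in the same CSI state (cursor movement, erasing, scrolling regions), which is why a state machine scales better than ad-hoc string matching.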

This is my first application in the Android Market. There are at least 10 other terminal emulators in the market; we’ll see how this one is received.

A bit of history: This was my first Android application. I wrote it for the original “Sooner” Android devices, and have kept it updated for the successive generations of devices. It requires native code because there are no public Android Java APIs for dealing with PTYs.
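As a rough illustration of the native piece, here is how a pseudo-terminal can be allocated with the POSIX calls `posix_openpt`, `grantpt`, `unlockpt` and `ptsname`. This is a generic sketch, not the emulator’s actual JNI code; `OpenPtyMaster` is a hypothetical name, and older Android releases may need to open `/dev/ptmx` directly instead of calling `posix_openpt`.

```cpp
#include <cassert>
#include <cstdlib>   // posix_openpt, grantpt, unlockpt, ptsname
#include <fcntl.h>   // O_RDWR, O_NOCTTY
#include <string>
#include <unistd.h>  // close

// Opens the master side of a new pseudo-terminal and reports the path of the
// slave device. Returns the master file descriptor, or -1 on failure.
int OpenPtyMaster(std::string& slavePath) {
    int master = posix_openpt(O_RDWR | O_NOCTTY);
    if (master < 0) return -1;
    // Set slave ownership/permissions, then unlock it so it can be opened.
    if (grantpt(master) != 0 || unlockpt(master) != 0) {
        close(master);
        return -1;
    }
    const char* name = ptsname(master);  // static buffer: not thread-safe
    if (name == nullptr) {
        close(master);
        return -1;
    }
    slavePath = name;
    return master;
}
```

After forking, the child would typically call `setsid()`, open the slave path, and `dup2` it onto descriptors 0, 1 and 2 before exec’ing a shell, while the Java side reads and writes the master descriptor.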

A bit of ancient history: I wrote two terminal emulators for the Atari 800 back in the day: Chameleon, in 6502 assembly, and a version of Kermit in the excellent “Action!” programming language. Kermit was one of my first “open source” programs. If you poke around on the web you can still find it here and there.

02 Oct 2009

I’ve just published the source code for a port of Quake to the Android platform.

This is something I did a while ago as an internal test application.

It’s not very useful as a game, because there hasn’t been any attempt to optimize the controls for the mobile phone. It’s also awkward to install, because the end user has to supply the Quake data files on their own.

Still, I thought people might enjoy seeing a full-sized example of how to write a native code video game for the Android platform. So there it is, in all its retro glory.

(Porting Quake II or Quake III is left as an exercise for the reader. :-))

What’s different about this particular port of Quake?

Converted the original application into a DLL

Android applications are written in Java, but they are allowed to call native-language DLLs, and the native-language DLLs are allowed to call a limited selection of OS APIs, which include OpenGL ES and Linux file I/O.

I was able to make the game work by using Java for:

- The Android activity life-cycle

- Event input

- View system integration

- EGL initialization

- Running the game in its own rendering loop, separate from the UI thread

And then I use C++ for the rest. The interface between Java and C is pretty simple. There are calls for:

- initialize

- step - which does a game step, and draws a new frame.

- handle an input event (by setting variables that are read during the “step” call).

- quit
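The post doesn’t show the native side of this interface, so here is a minimal sketch of one way the four calls could fit together, using plain C++ functions in place of the JNI exports. It assumes input events arrive on the UI thread while “step” runs on the game’s rendering thread, so events are queued under a mutex and drained at the start of each step. The namespace and all function names are hypothetical.

```cpp
#include <cassert>
#include <deque>
#include <mutex>

// Hypothetical native side of the Java <-> C interface. In the real port these
// would be exported through JNI; plain functions keep the sketch self-contained.
namespace native_api {

struct KeyEvent {
    int key;
    bool down;
};

std::mutex gInputMutex;             // guards gPendingKeys (UI thread vs. render thread)
std::deque<KeyEvent> gPendingKeys;  // written by HandleKey, drained by Step
int gKeysProcessed = 0;             // touched only by the rendering thread
int gFramesDrawn = 0;
bool gRunning = false;

// "initialize": reset state before the first frame.
void Init() {
    std::lock_guard<std::mutex> lock(gInputMutex);
    gPendingKeys.clear();
    gKeysProcessed = 0;
    gFramesDrawn = 0;
    gRunning = true;
}

// "handle an input event": called on the UI thread, it only records the event.
void HandleKey(int key, bool down) {
    std::lock_guard<std::mutex> lock(gInputMutex);
    gPendingKeys.push_back({key, down});
}

// "step": called on the rendering thread once per frame.
void Step() {
    std::deque<KeyEvent> events;
    {
        std::lock_guard<std::mutex> lock(gInputMutex);
        events.swap(gPendingKeys);  // take everything queued since the last frame
    }
    for (const KeyEvent& e : events) {
        (void)e;  // a real game would pass e.key / e.down to its key handler
        ++gKeysProcessed;
    }
    ++gFramesDrawn;  // stand-in for one game tick plus drawing a frame
}

// "quit": called from the rendering thread to stop the game loop.
void Quit() { gRunning = false; }

}  // namespace native_api
```

Swapping the whole queue out under the lock keeps the lock hold time short, so the UI thread is never blocked for the length of a game tick.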

The Java-to-C API is a little hacky. At the time I developed this game I didn’t know very much about how to use JNI, so I didn’t implement any C-to-Java calls, preferring to pass status back in return values. For example, the “step” function returns a trinary value indicating:

- That the game wants raw PC keyboard events, because it is in normal gameplay mode.

- That it wants ASCII character events, because it is in menu / console mode.

- That the user has chosen to Quit.

This might have been better handled by providing a series of Java methods that the C code could call back. (But using the return value was easier. :-))

Similarly, the “step” function takes arguments that give the current screen size. This is used to tell the game when the screen size has changed. It would be cleaner if this was communicated by a separate API call.
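A sketch of the “step” contract just described, with hypothetical names: the return value is the three-way result from the list above, and the width and height arguments are compared against the previous frame’s values to detect a resize. This illustrates the design the post calls hacky; it is not the actual source.

```cpp
#include <cassert>

// The three results "step" can report back to Java (hypothetical names).
enum StepResult {
    kWantRawKeys = 0,     // normal gameplay: send raw key-down/key-up events
    kWantAsciiChars = 1,  // menu or console: send translated characters
    kQuitRequested = 2    // the player chose Quit
};

struct GameState {
    int width = 0;
    int height = 0;
    int resizeCount = 0;  // how many times the viewport was rebuilt
    bool inMenu = false;
    bool quit = false;
};

StepResult Step(GameState& g, int width, int height) {
    // The size arrives with every frame, so the native code must compare it
    // with the previous value to notice a change.
    if (width != g.width || height != g.height) {
        g.width = width;
        g.height = height;
        ++g.resizeCount;  // a real game would reset its GL viewport here
    }
    // ... run one game tick and draw a frame ...
    if (g.quit) return kQuitRequested;
    return g.inMenu ? kWantAsciiChars : kWantRawKeys;
}
```

The cleaner alternative would move the size comparison into a separate resize call (for example a hypothetical `OnResize(width, height)`) made only when the Java view actually changes size, so `Step` would need no size arguments at all.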

Converted the Quake code from C to C++

I did this early in development, because I’m more comfortable writing in C++ than in C. I like the ability to use classes occasionally. The game remains 99% pure C, since I didn’t rewrite very much of it. Also, as part of the general cleanup I fixed all gcc warnings. This was fairly easy to do, with the exception of aliasing warnings related to the “Quake C” interpreter FFI.
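Aliasing warnings of this kind come from reading the same storage as two different types through a pointer cast; Quake’s interpreter, for example, views some float slots as ints. The post doesn’t say how these warnings were (or weren’t) resolved. A common, standards-conforming fix is to copy the bits with `memcpy`, sketched below with hypothetical helper names.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Type punning through a cast such as *(int32_t*)&someFloat violates the
// strict-aliasing rules that gcc's -Wstrict-aliasing warns about. Copying the
// bytes with memcpy is well defined, and optimizing compilers typically reduce
// it to a plain load or register move.
static_assert(sizeof(float) == sizeof(int32_t), "assumes a 32-bit float");

// Reads the bit pattern stored in a float slot as an int.
inline int32_t FloatSlotAsInt(const float* slot) {
    int32_t value;
    std::memcpy(&value, slot, sizeof value);
    return value;
}

// Stores an int bit pattern into a float slot.
inline void StoreIntInFloatSlot(float* slot, int32_t value) {
    std::memcpy(slot, &value, sizeof value);
}
```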

Converted the graphics from OpenGL to OpenGL ES

This was necessary to run on the Android platform. I did a straightforward port. The most difficult part of the port was implementing texture conversion routines that replicated OpenGL functionality not present in OpenGL ES. (Converting RGB textures to RGBA textures, synthesizing MIP-maps and so forth.)

Implemented a Texture Manager

The original glQuake game allocated textures as needed, never releasing old textures. It relied upon the OpenGL driver to manage texture memory, which in turn relied on the operating system’s virtual memory to store all the textures. But Android does not have enough RAM to store all the game’s textures at once, and the Android OS does not implement a swap system to offload RAM to the Flash or SD Card. I worked around this by implementing my own ad-hoc swap system: a texture manager that uses a memory-mapped file (backed by a file on the SD Card) to store the textures, and maintains a limited-size LRU cache of active textures in the OpenGL ES context.
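The limited-size LRU cache can be sketched as a list ordered by recency plus a hash map from texture id to list position, which gives constant-time lookup and eviction. The `load` and `unload` callbacks stand in for the memory-mapped-file read plus OpenGL ES upload, and for texture deletion; they and the class name `TextureLru` are hypothetical, and the real texture manager may budget by bytes rather than by texture count as assumed here.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <list>
#include <unordered_map>

// LRU cache of resident textures, keyed by the game's texture id.
class TextureLru {
public:
    using LoadFn = std::function<unsigned(int textureId)>;  // returns a GL texture name
    using UnloadFn = std::function<void(unsigned glName)>;  // e.g. wraps glDeleteTextures

    TextureLru(size_t capacity, LoadFn load, UnloadFn unload)
        : capacity_(capacity), load_(load), unload_(unload) {
        assert(capacity_ > 0);
    }

    // Returns the GL name for textureId, loading it if necessary and evicting
    // the least recently used texture when the cache is full.
    unsigned Bind(int textureId) {
        auto it = index_.find(textureId);
        if (it != index_.end()) {
            order_.splice(order_.begin(), order_, it->second);  // hit: mark most recent
            return it->second->glName;
        }
        if (order_.size() == capacity_) {
            Entry& victim = order_.back();  // least recently used
            unload_(victim.glName);
            index_.erase(victim.id);
            order_.pop_back();
        }
        order_.push_front({textureId, load_(textureId)});
        index_[textureId] = order_.begin();
        return order_.front().glName;
    }

    size_t Resident() const { return order_.size(); }

private:
    struct Entry {
        int id;
        unsigned glName;
    };
    size_t capacity_;
    LoadFn load_;
    UnloadFn unload_;
    std::list<Entry> order_;  // front = most recently used
    std::unordered_map<int, std::list<Entry>::iterator> index_;
};
```

Counting textures rather than bytes keeps the sketch short; a byte budget would evict in a loop until the incoming texture fits.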

Faking a PC Keyboard

Quake expects to talk to a PC keyboard as its primary input device. It handles raw key-down and key-up events, and handles the shift keys itself. This was a problem for Android because mobile phones have much smaller keyboards, so a character like ‘[’ might be unshifted on a PC keyboard but shifted on the Android keyboard.

I solved this by translating Android keys into PC keys, and by rewriting the config.cfg file to use a different set of default keys to play the game. But my solution is not perfect, because it is essentially hard-coded to a particular Android device (the T-Mobile G1). As Android devices proliferate there are likely to be new devices with alternate keyboard layouts, and they will not necessarily be able to control the game effectively using a G1-optimized control scheme.
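A minimal sketch of the Android-to-PC key translation. The Android values are key codes from `android.view.KeyEvent`, and the Quake values follow the `K_` constants in Quake’s `keys.h`; verify both against their sources before relying on them. The particular mapping (for instance D-pad center to Ctrl) is an arbitrary example, which is exactly the kind of device-specific choice described above.

```cpp
#include <cassert>

// Quake key codes: printable keys use their lowercase ASCII value, and
// special keys use values above 127.
enum QuakeKey {
    K_TAB = 9, K_ENTER = 13, K_ESCAPE = 27, K_SPACE = 32, K_BACKSPACE = 127,
    K_UPARROW = 128, K_DOWNARROW = 129, K_LEFTARROW = 130, K_RIGHTARROW = 131,
    K_ALT = 132, K_CTRL = 133, K_SHIFT = 134
};

// A few android.view.KeyEvent key codes.
enum AndroidKey {
    KEYCODE_0 = 7, KEYCODE_9 = 16,
    KEYCODE_DPAD_UP = 19, KEYCODE_DPAD_DOWN = 20,
    KEYCODE_DPAD_LEFT = 21, KEYCODE_DPAD_RIGHT = 22, KEYCODE_DPAD_CENTER = 23,
    KEYCODE_A = 29, KEYCODE_Z = 54,
    KEYCODE_ALT_LEFT = 57, KEYCODE_SHIFT_LEFT = 59,
    KEYCODE_SPACE = 62, KEYCODE_ENTER = 66, KEYCODE_DEL = 67
};

// Translates an Android key code to the PC key Quake expects, or returns 0
// when there is no mapping. Letters map to lowercase because Quake applies
// Shift itself.
int AndroidToQuakeKey(int code) {
    if (code >= KEYCODE_A && code <= KEYCODE_Z) return 'a' + (code - KEYCODE_A);
    if (code >= KEYCODE_0 && code <= KEYCODE_9) return '0' + (code - KEYCODE_0);
    switch (code) {
    case KEYCODE_DPAD_UP:     return K_UPARROW;
    case KEYCODE_DPAD_DOWN:   return K_DOWNARROW;
    case KEYCODE_DPAD_LEFT:   return K_LEFTARROW;
    case KEYCODE_DPAD_RIGHT:  return K_RIGHTARROW;
    case KEYCODE_DPAD_CENTER: return K_CTRL;  // arbitrary example choice
    case KEYCODE_ALT_LEFT:    return K_ALT;
    case KEYCODE_SHIFT_LEFT:  return K_SHIFT;
    case KEYCODE_SPACE:       return K_SPACE;
    case KEYCODE_ENTER:       return K_ENTER;
    case KEYCODE_DEL:         return K_BACKSPACE;
    default:                  return 0;
    }
}
```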

A better approach would be to redesign the game control scheme to avoid needing keyboard input.

A Few Words about Debugging

I did the initial bring-up of Android Quake on a Macintosh, using Xcode and a special build of Android called “the simulator”, which runs a limited version of the entire Android system as a native Mac OS application. This was helpful because I was able to use the Xcode visual debugger to debug the game.

Once the game was limping along, I switched to “printf”-style log-based debugging and the emulator, and once T-Mobile G1 hardware became available I switched to using real hardware.

Using printf-style debugging was awkward, but sufficient for my needs. It would be very nice to have a source-level native code debugger that worked with Eclipse.
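For printf-style debugging, the NDK’s logging call is `__android_log_print` from `<android/log.h>` (link with `-llog`), and its output appears in `adb logcat`. A small macro like the one below lets the same log lines go to stderr in a non-Android build, such as a desktop debug build. The `LOGI` and `LOG_TAG` names follow a common convention and are assumptions here, not taken from the port.

```cpp
#include <cassert>
#include <cstdio>

// Tag shown in logcat (hypothetical name).
#define LOG_TAG "Quake"

#ifdef __ANDROID__
#include <android/log.h>
// Android build: send printf-style messages to logcat at INFO priority.
#define LOGI(...) __android_log_print(ANDROID_LOG_INFO, LOG_TAG, __VA_ARGS__)
#else
// Other builds: same format string to stderr, followed by a newline.
// The first argument must be a string literal so it can be concatenated.
#define LOGI(...) (std::fprintf(stderr, LOG_TAG ": " __VA_ARGS__), std::fputc('\n', stderr))
#endif
```

Usage is the same on both targets, for example `LOGI("frame %d took %d ms", frame, ms);`.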

Note that the simulator and emulator use a software OpenGL ES implementation, which is slightly different from the hardware OpenGL ES implementation available on real Android devices. This required extra debugging and coding. It seems that every time Android Quake is ported to a new OpenGL ES implementation, it exposes bugs in both the game’s use of OpenGL ES and the device’s OpenGL ES implementation.

Oh, and I sure wish I had a Microsoft PIX-style graphics debugger! It would have been very helpful during those head-scratching “why is the screen black” debugging sessions.