05 Jan 2015

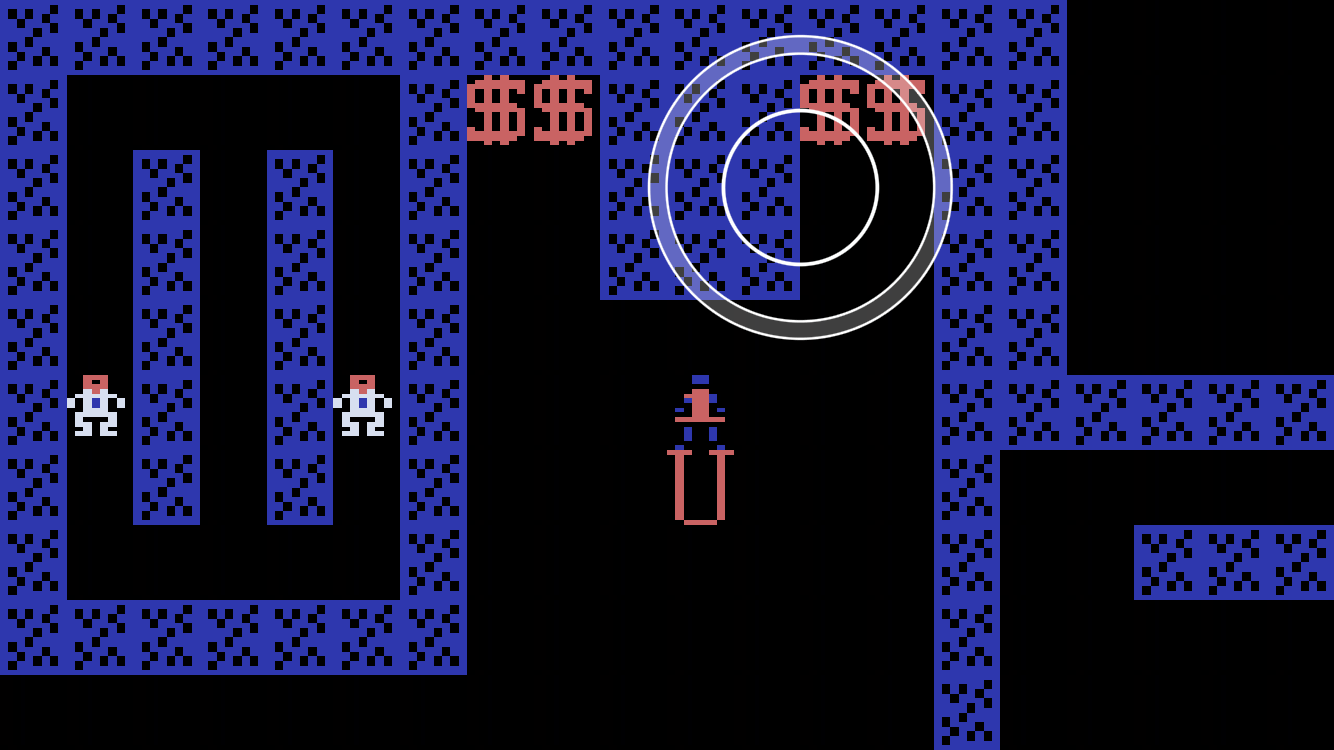

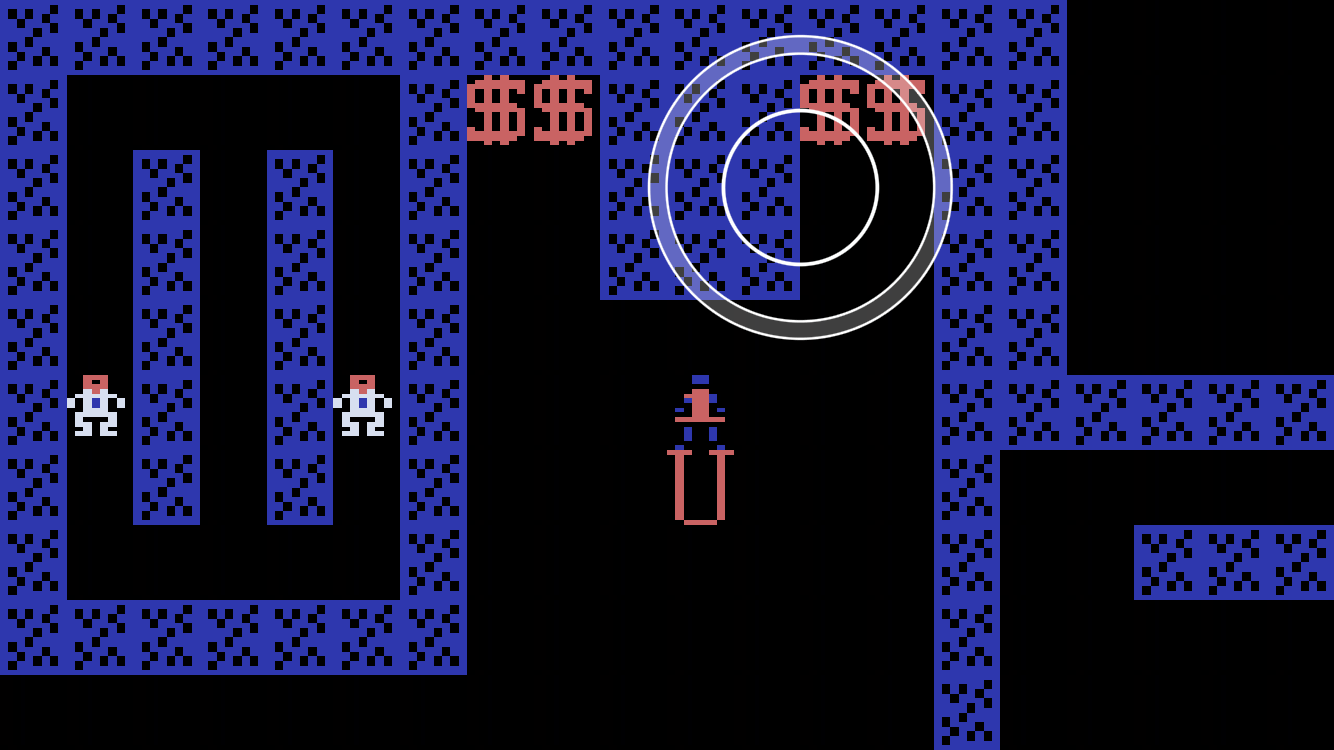

There are a total of 3 draw calls and 2 textures in this scene:

The whole tile map is rendered as a single draw call: a single 2-triangle tile

that’s instanced Row x Column times, using a 3D texture as a texture atlas. I

originally used point sprites, but switched to instanced triangles because I

wanted to use non-square tiles.

The virtual joystick is rendered as two coarse triangle strip rings, using a

1D radial texture. Note the anti aliasing. (I could have used quads, but

wanted to minimize overdraw.)

So far Metal has been fairly straightforward to use, at least for someone like

me coming from a DirectX 9 / Xbox 360 / Android OpenGL ES 2.0 background.

02 Jan 2015

At my house we are trending towards having N+1 laptops for N people, because

(a) I need to keep my work laptop separate from my home laptop, and (b)

frequently everyone in the family wants to use their laptops at the same

time.

The same goes for tablets, and when the kids are old enough to have phones I

expect it will be the same for phones.

I tried using multi-user accounts on shared family tablets and laptops, but

ended up assigning each kid their own devices. It was simpler from an account

management point of view, and the kids like personalizing their devices with

stickers and cases.

Having assigned devices also makes it easier to give different Internet and

gaming privileges to different kids, depending on age and maturity.

A downside of assigned devices is that not all the devices have the same

features. People complain about hand-me-down devices, as well as the perverse

incentive created when an accidentally broken device is replaced by a brand

new, better device.

02 Jan 2015

When I started working on Android in 2007, I had never owned a mobile phone.

When Andy Rubin heard this, he looked at me, grinned, and said “man, you’re on

the wrong project!”

But actually, being late to mobile worked out well. In the early days of

Android the daily build was rough. Our Sooner and

G1 prototypes often wouldn’t work

reliably as phones, and that drove the other Android developers crazy. But

since I was not yet relying on a mobile phone, it didn’t bother me much.

Seven years later, mobile’s eaten the world.

But I still haven’t internalized what that means. I think I’m still too

personal-computer-centric in my thinking and my planning.

Here’s some recent changes that I’m still trying to come to grips with:

- Android and iOS are the important client operating systems. The web is now a legacy system.

- Containerized Linux is the important server operating system. Everything else is legacy.

- OS X is the important programmer’s desktop OS (because it’s required for iOS development, and adequate for Android and containerized Linux development.)

- The phone is the most important form factor, with tablet in second place.

- Media has moved from local storage to streaming.

- Programming cultural discussion has moved from blogs & mailing lists to Hacker News, Reddit & Twitter. (To be fair, these new forums mostly link back to blog posts for the actual content.)

In reaction, I’ve stopped working on the following projects:

- Terminal Emulator for Android. When I started this project, all Android devices had hardware keyboards. But those days are long gone. And unfortunately for most people there isn’t a compelling use case for an on-the-device terminal emulator. The compelling command-line use cases for mobile are SSH-ing from the mobile device to another machine, and adb-ing into the Android device from a desktop.

- BitTorrent clients. My clients were written just for fun, to learn how to use the Golang and node.js networking libraries. With the fun/learning task accomplished, and with BitTorrent usage in decline, there isn’t much point in working on these clients. (Plus I didn’t like dealing with bug reports related to sketchy torrent sites.)

- New languages. For the platforms I’m interested in, the practical languages are C/C++, Java, Objective C, and Swift. (And Golang for server-side work.)

- I spent much of the past seven years experimenting with dynamic languages, but a year of using Python and JavaScript in production was discouraging. The brevity was great, but the loss of control was not.

Personal Projects for 2015

First, I’m going to port my ancient game Dandy to mobile. It needs a lot of

work to “work” on mobile, but it’s a simple enough game that the port should

be possible to do on a hobby time budget. I’m probably going to go closed-

source on this project, but I may blog the progress, because the process of

writing down my thoughts should be helpful.

After that, we’ll see how it goes!

02 Jan 2015

I’ve been reading Game Programming Patterns by Bob Nystrom.

It’s available to read online for free, as well as for

purchase in a variety of formats.

A good book for people who are writing a video game engine. I found myself

agreeing with pretty much everything in this book.

Note - this book is about internal software design. It’s not about game

design, or graphics, physics, audio, input, monetization strategies, etc. So

you won’t be able to write a hit video game after reading this book. But if

you happen to be writing an engine for a video game, this book will help you

write a better one.

Edit – and I’ve finished reading it. It was a quick read, but a good one. I

consider myself an intermediate level game developer. I’ve written a few

simple games and I’ve worked on several other games. (For example, I’ve ported

Quake to many different computers over the years.)

For me the most educational chapters were Game Loop and

Component, although

Bytecode and

Data Locality were also

quite interesting.

I like that the chapters have links to relevant external documents for further

research.

I felt smarter after reading this book.

02 Jan 2015

It’s been a while since my last post – I’ve been posting inside the Google

internal ecosystem, but haven’t posted much publicly.

What have I been up to in the past 3 years?

Work

Prototyped a Dart runtime for Android. Amusingly enough, this

involved almost no Dart code. It was 90% Python coding (wrangling Gyp build system scripts) and 10% C++ coding

(calling the Dart VM).

Extended the Audio players for Google Play Music’s Web client. I learned

ActionScript, the Closure dialect of JavaScript, and HTML5 Audio APIs (Web Audio and EME.)

Started working on the Google Play Music iOS client.

I learned Objective C, Swift, iOS and Sqlite.

Personal Projects

Prototyped a Go language runtime for Android. Unpublished, but luckily the

Go team is picking up the slack.

Finished working on Terminal Emulator for Android.

I’m keeping it on life support, but no new features.

Personal Life

Started exercising again after a 10 year hiatus. It’s good to get back into shape.

Switched to a low cholesterol diet. Google’s cafes make this pretty easy to do.

Watched my kids grow!

12 Sep 2011

Today I backed up all my family pictures and videos to Picasa Web Albums.

For several months I have been thinking of ways to backup my pictures to

somewhere outside my house. I wanted something simple, scalable and

inexpensive.

When I read that

Google+ users can store unlimited pictures sized <= 2048 x 2048 and videos <= 15 minutes long,

I decided to try using Google+ to back up my media.

Full disclosure: I work for Google, which probably predisposes me to like and

use Google technologies.

Unfortunately I had my pictures in so many folders that it wasn’t very

convenient to use either Picasa or

Picasa Web Albums Uploader to upload them.

Luckily, I’m a programmer, and Picasa Web Albums has a public API for

uploading images. Over the course of an afternoon, I wrote a Python script to

upload my pictures and videos from my home computer to my Picasa Web Album

account. I put it up on GitHub:

picasawebuploader

Good things:

- The Google Data Protocol is easy to use.

- Python’s built-in libraries made file and directory traversal easy.

- OSX’s built-in “sips” image processing utility made it easy to scale images.

Bad things:

- The documentation for the Google Data Protocol is not well organized or comprehensive.

- It’s undocumented how to upload videos. Luckily I found a Flicker-to-Picasa-Web script that showed me how.

To do:

- Use multiple threads to upload images in parallel.

- Prompt for password if not supplied on command line.

20 Dec 2010

I wrote an asynchronous directory tree walker in

node.js. Note the use of Continuation Passing Style in

the fileCb callback. That allows the callback to perform its own asynchronous

operations before continuing the directory walk.

(This code is provided under the Apache Licence

2.0.)

// asynchronous tree walk

// root - root path

// fileCb - callback function (file, next) called for each file

// -- the callback must call next(falsey) to continue the iteration,

// or next(truthy) to abort the iteration.

// doneCb - callback function (err) called when iteration is finished

// or an error occurs.

//

// example:

//

// forAllFiles('~/',

// function (file, next) { sys.log(file); next(); },

// function (err) { sys.log("done: " + err); });

function forAllFiles(root, fileCb, doneCb) {

fs.readdir(root, function processDir(err, files) {

if (err) {

fileCb(err);

} else {

if (files.length > 0) {

var file = root + '/' + files.shift();

fs.stat(file, function processStat(err, stat) {

if (err) {

doneCb(err);

} else {

if (stat.isFile()) {

fileCb(file, function(err) {

if (err) {

doneCb(err);

} else {

processDir(false, files);

}

});

} else {

forAllFiles(file, fileCb, function(err) {

if (err) {

doneCb(err);

} else {

processDir(false, files);

}

});

}

}

});

} else {

doneCb(false);

}

}

});

}

12 Nov 2010

My kids’ favorite piece of educational software is

“Clifford The Big Red Dog Thinking Adventures” by

Scholastic. We received it as a hand-me-down from their cousins. The CD was

designed for Windows 95-98 and pre-OSX Macintosh. Unfortunately, it does not

run on OS X 10.6. (I think it fails to run because it uses the PowerPC

instruction set and Apple dropped support for emulating that instruction set

in 10.6.)

After some trial and error, I found the most reliable way to run Clifford on

my Mac was:

- Install VMWare Fusion.

- Install Ubuntu 10.10 32-bit in a Virtual Machine.

- Install the VMWare Additions to make Ubuntu work better in the VM.

- Install Wine on Ubuntu 10.10. (Wine provides partial Windows API emulation for Linux.)

- Configure the audio for Wine. (I accepted the defaults.)

- Insert the Clifford CD and manually run the installer.

The result is that the Clifford game runs full screen with smooth animation

and audio, even on my lowly first-generation Intel Mac Mini.

You may scoff at all these steps, but (a) it’s cheap, and (b) it’s less work

than installing and maintaining a Windows PC or VM just to play this one game.

(It’s too bad there isn’t a Flash, HTML5, Android or iOS version of this game,

it’s really quite well done.)

06 Sep 2010

This Labor Day weekend I decommissioned my tower PC.

If memory serves, I bought it in the summer of 2002, in one last burst of PC

hobby hacking before the birth of my first child. It’s served me and my

growing family well over the years, first as my main machine, later as a media

server.

But the world has changed, and it doesn’t make much sense to keep it running

any more. It’s been turned off for the last year while I traveled to Taiwan.

In that time I’ve learned to live without it.

The specific reasons for decommissioning it now are:

- It needs a new CMOS battery.

- It needs a year’s worth of Vista service packs and security updates.

- I don’t use the media center feature any more.

- After a year without a Windows machine I don’t want to maintain one any more.

- It’s too noisy and uses too much energy to leave on as a server.

The decommissioning process

I booted it, and combed through the directories. I carefully copied off all

the photos, code projects, email and documents that had accumulated over the

years. Lots of good memories!

GMail Import

I discovered some old Outlook Express message archives. I wrote a Python

script to import them into GMail. I didn’t like any of the complicated recipes

I found on the web, so I did it the easy way: I wrote a toy POP3 server in

Python that served the “.eml” messages from the archive directory. Once the

toy POP3 server was running, GMail was happy to import all the email messages

from my server.

Erasing the disks with Parted Magic

Once I was sure I had copied all my data off the machine it was time to erase

the disks. I erased the disks using the

ATA Secure Erase command. I did

this using a small Linux distro, Parted Magic. I

downloaded the distribution ISO file and burned my own CD. Once the CD was

burned, I just rebooted the PC and it loaded Parted Magic from the CD.

Parted Magic has a menu item, “Erase Disk”. It lets you choose the disk and

the erase method. The last method listed is the one I used. The other methods

work too, but they don’t use the ATA Secure Erase command.

It took quite a while to erase the disks. Each disk took about two hours to

erase. (They took 200 GB per hour, to be precise.) Unfortunately, due to the

limitations of my ATA drivers, I had to erase them one at a time.

While I think the ATA Secure Erase command is the fastest and most reliable

way to erase modern hard disks, there is another way: Instead of having the

drive erase itself, you can copy data over every sector of the drive. The

advantage of this technique is that it also works with older drives. If you

choose to go this route, one of the easiest way to do it is to use

Darik’s Boot and Nuke utility. This is a Linux distro that does

nothing besides erasing all the hard disks of the computer you boot it on. You

just boot the CD, then type “autonuke” to erase every disk connected to your

computer.

Disposing of the computer

It’s still a viable computer, so I will probably donate it to a local charity.

The charity has a rule that the computer you donate must be less than 5 years

old. I think while some parts of the computer are older than that, most of the

parts are young enough to qualify.

Reflections on the desktop PC platform

Being able to upgrade the PC over the years is a big advantage of the

traditional desktop tower form factor. Here’s how I upgraded it over the

years:

Initial specs (2002)

- Windows XP

- ASUS P4S533 motherboard

- Pentium 4 at 1.8 GHz

- ATI 9700 GPU

- Dell 2001 monitor (1600 x 1200)

- 256 MB RAM

- 20 GB PATA HD

- Generic case

Final specs (2010)

- Windows Vista Ultimate

- ASUS P4S533 motherboard

- Pentium 4 at 1.8 GHz

- ATI 9700 GPU

- Dell 2001 monitor (1600 x 1200)

- 1.5 GB RAM

- 400 GB 7200 RPM PATA HD

- 300 GB 7200 RPM PATA HD

- ATI TV Theater analog capture card

- Linksys Gigabit Ethernet PCI card

- Antec Sonata quiet case

I think these specs show the problems endemic to the desktop PC market.

Although I didn’t know it at the time, the PC platform had already plateaued.

In eight years of use I wasn’t motivated to upgrade the CPU, motherboard,

monitor, or GPU. If I hadn’t been a Microsoft employee during part of this

time I wouldn’t have upgraded the OS either.

What killed the PC for me wasn’t the hardware wearing out, it was mission

change. Like most people I now use the web rather than the local PC for most

of my computer activity. Maintaining the local PC is pure overhead, and I’ve

found it’s easier to maintain a Mac than a PC.

It was nostalgic to turn on the PC again and poke through the Vista UI. It

brought back some good memories. (And I discovered some cute pictures of my

kids that I’d forgotten about.)

Thank you trusty PC. You served me and my family well!

29 Aug 2010

It’s been a year since

I bought my fancy car stereo.

I wanted to mention that I’ve ended up not using most of the fancy features.

- The built-in MP3 player had issues with my 1000-song / 100 album / 4 GB music collection:

- It took a long time to scan the USB storage each time the radio turned on.

- It was difficult to navigate the album and song lists.

- Chinese characters were not supported.

- It took a long time to “continue” a paused item, especially a long item like a podcast.

- The bluetooth pairing only worked with one phone at a time, which was awkward in a two-driver family.

Here’s how I use it now:

- I connect my phone using both the radio’s USB (so the phone is being charged) and mini stereo plugs.

- I use the phone’s built-in music player.

- I have the radio set to AUX to play the music from the stereo input plug.

It’s a little bit cluttered, because of the two cables, but it gets the job

done. The phone’s music player UI is so much better than the radio’s UI.

In effect I have reduced the car stereo to serving as a volume control, an

amplifier, and a USB charger.