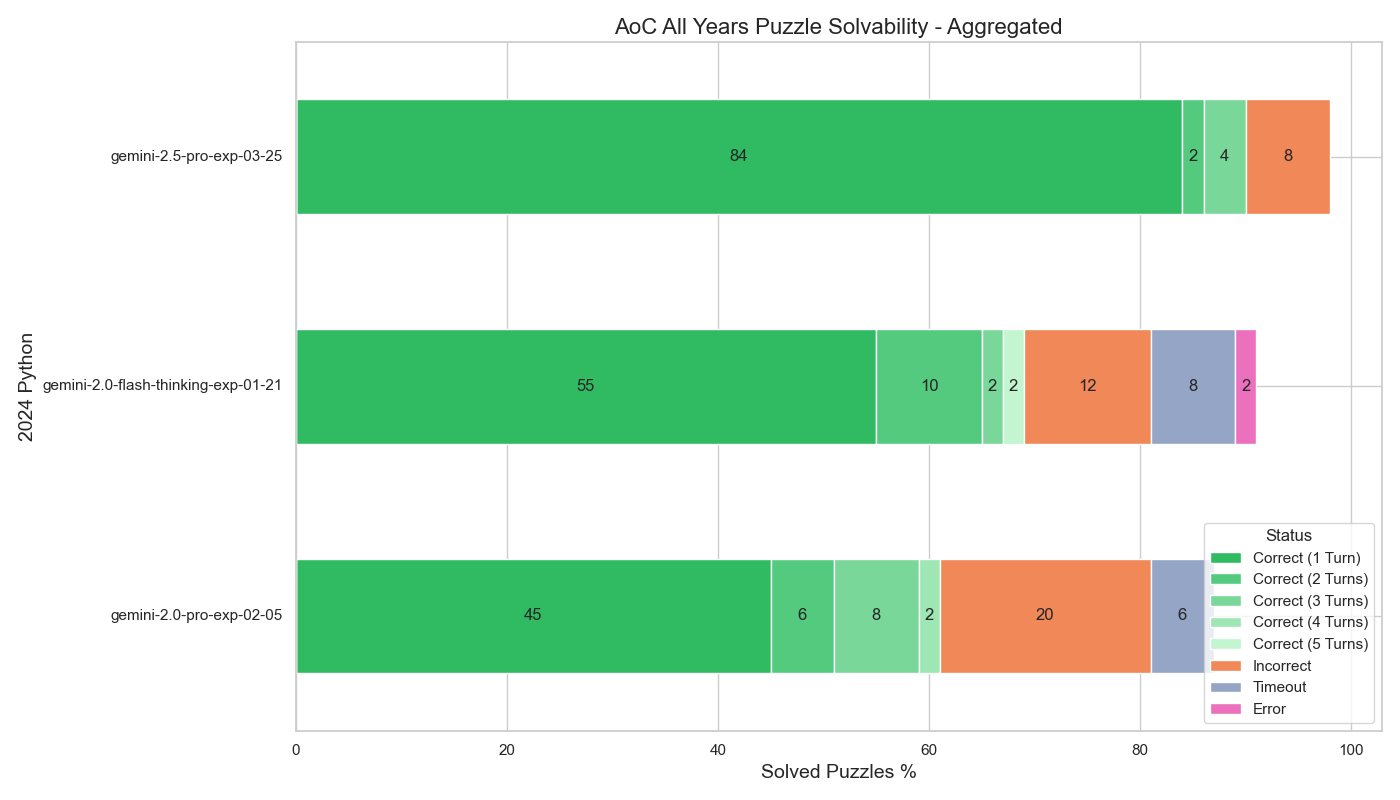

The new Gemini 2.5 Pro model from Google is very good at solving Advent of Code 2024 puzzles using many different programming languages.

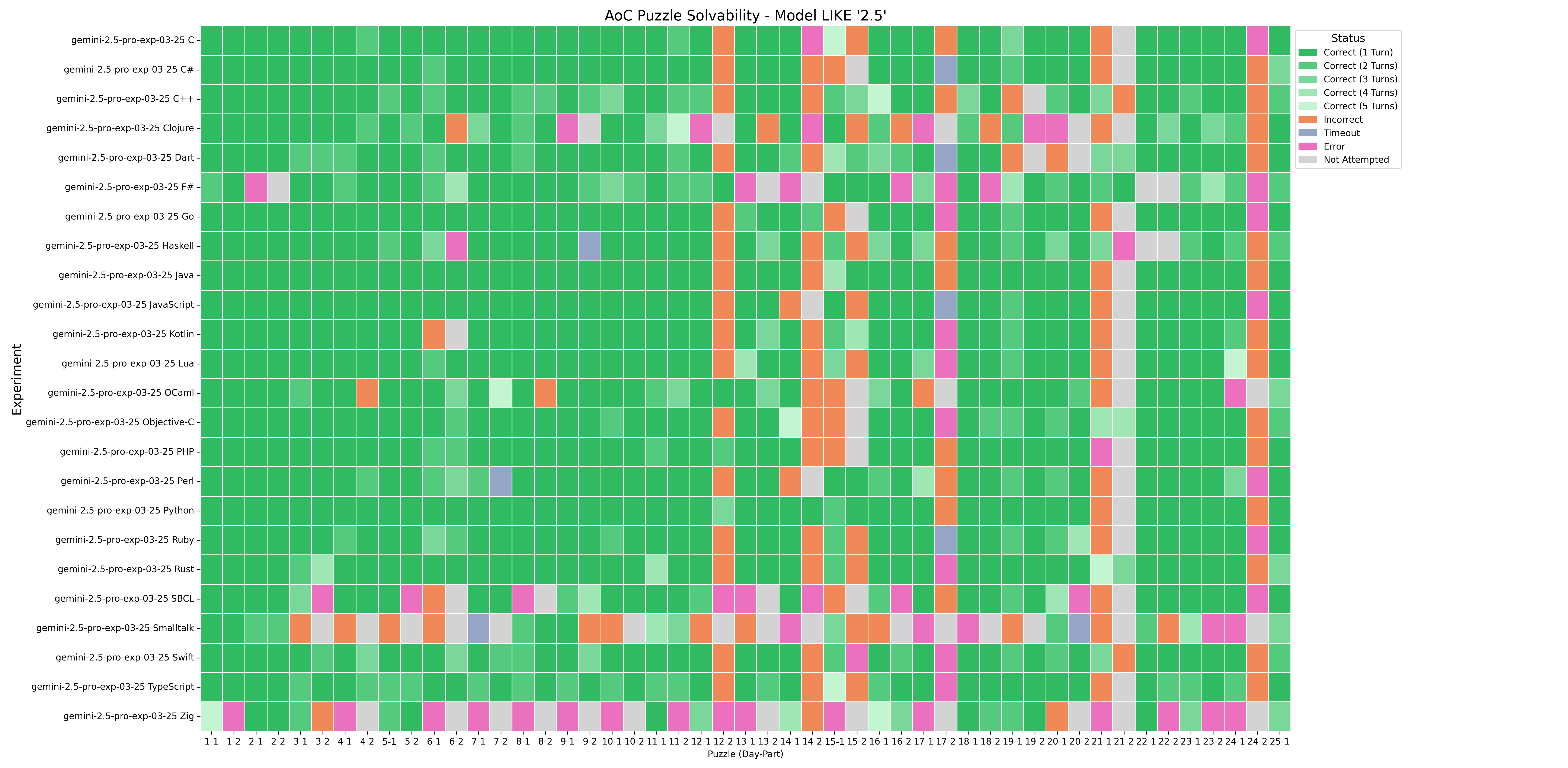

Performance by Puzzle

The puzzles that were difficult for Gemini 2.5 Pro were:

This puzzle's specification is difficult to understand, but it is easy to solve once you use the correct algorithm.

Day 14 Part 2: Restroom Redoubt

To solve this puzzle you have to either get lucky or reason from first principles. Gemini 2.5 Pro did solve it once, very cleanly.

This was a Sokoban-style sliding box simulation. Part 1 is easy to solve using recursion, but difficult to solve using iteration. Gemini typically tries to solve it using iteration. Gemini 2.5 Pro was the first Gemini model I tested that was able to solve part 1 by implementing the able-to-push check recursively.
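To illustrate what a recursive able-to-push check can look like, here is a minimal Python sketch. It is not the code Gemini produced. The grid encoding (`#` wall, `O` box, `.` empty), the separately tracked robot position, and the function names `can_push`, `push`, and `step` are all assumptions made for this example.

```python
# Hypothetical encoding: '#' wall, 'O' box, '.' empty.
# The robot position is tracked separately as (row, col).

def can_push(grid, r, c, dr, dc):
    """True if cell (r, c) is empty, or holds a box that can move along (dr, dc)."""
    cell = grid[r][c]
    if cell == '#':
        return False
    if cell == '.':
        return True
    # The cell holds a box: it can move only if the next cell can be cleared.
    return can_push(grid, r + dr, c + dc, dr, dc)

def push(grid, r, c, dr, dc):
    """Shift the chain of boxes starting at (r, c); call only after can_push succeeds."""
    if grid[r][c] == 'O':
        push(grid, r + dr, c + dc, dr, dc)  # clear the destination first
        grid[r + dr][c + dc] = 'O'
        grid[r][c] = '.'

def step(grid, robot, direction):
    """Try to move the robot one cell; return its new (or unchanged) position."""
    dr, dc = direction
    nr, nc = robot[0] + dr, robot[1] + dc
    if can_push(grid, nr, nc, dr, dc):
        push(grid, nr, nc, dr, dc)
        return (nr, nc)
    return robot
```

Separating the check from the move means a blocked push leaves the grid untouched, which is the part that tends to be fiddly in an iterative version.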

All Gemini models, including Gemini 2.5 Pro, had trouble with part 2, as the rules for part 2 are asymmetrical with respect to horizontal vs vertical movement.
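The asymmetry can be sketched in Python as follows. This assumes the part 2 variant uses boxes that are two cells wide, drawn as `[` and `]`, with `#` for walls and `.` for empty cells. The function name `can_push_wide` is hypothetical, and this is an illustration rather than any model's actual output.

```python
# Assumed encoding: '#' wall, '.' empty, '[' and ']' the two halves of a wide box.

def can_push_wide(grid, r, c, dr, dc):
    """True if cell (r, c) is empty or holds a box half that can move along (dr, dc)."""
    cell = grid[r][c]
    if cell == '#':
        return False
    if cell == '.':
        return True
    if dr == 0:
        # Horizontal move: boxes form a single straight chain,
        # so only the next cell in the row matters.
        return can_push_wide(grid, r, c + dc, dr, dc)
    # Vertical move: the box spans two columns, so both cells
    # above (or below) it must be clearable. The check branches.
    left = c if cell == '[' else c - 1
    return (can_push_wide(grid, r + dr, left, dr, dc)
            and can_push_wide(grid, r + dr, left + 1, dr, dc))
```

A horizontal push only ever follows one row, while a vertical push can fan out into a tree of boxes, any one of which may be blocked. A solution that treats both directions the same way will get one of them wrong.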

Day 17 Part 2: Chronospatial Computer

Solving this requires examining the puzzle input and reasoning about it. Gemini was not presented with the puzzle input, so it could not reason about it. This was a limitation of the LLM evaluation setup, and was not a fair test of the model’s ability.

Day 21 Part 2: Keypad Conundrum

Solving part 2 in a reasonable time requires thinking at a higher level, not just brute-force simulating the puzzle.

Solving part 2 requires examining the puzzle input and reasoning about it. Gemini was not presented with the puzzle input, so it could not reason about it. This was a limitation of the LLM evaluation setup, and was not a fair test of the model’s ability.

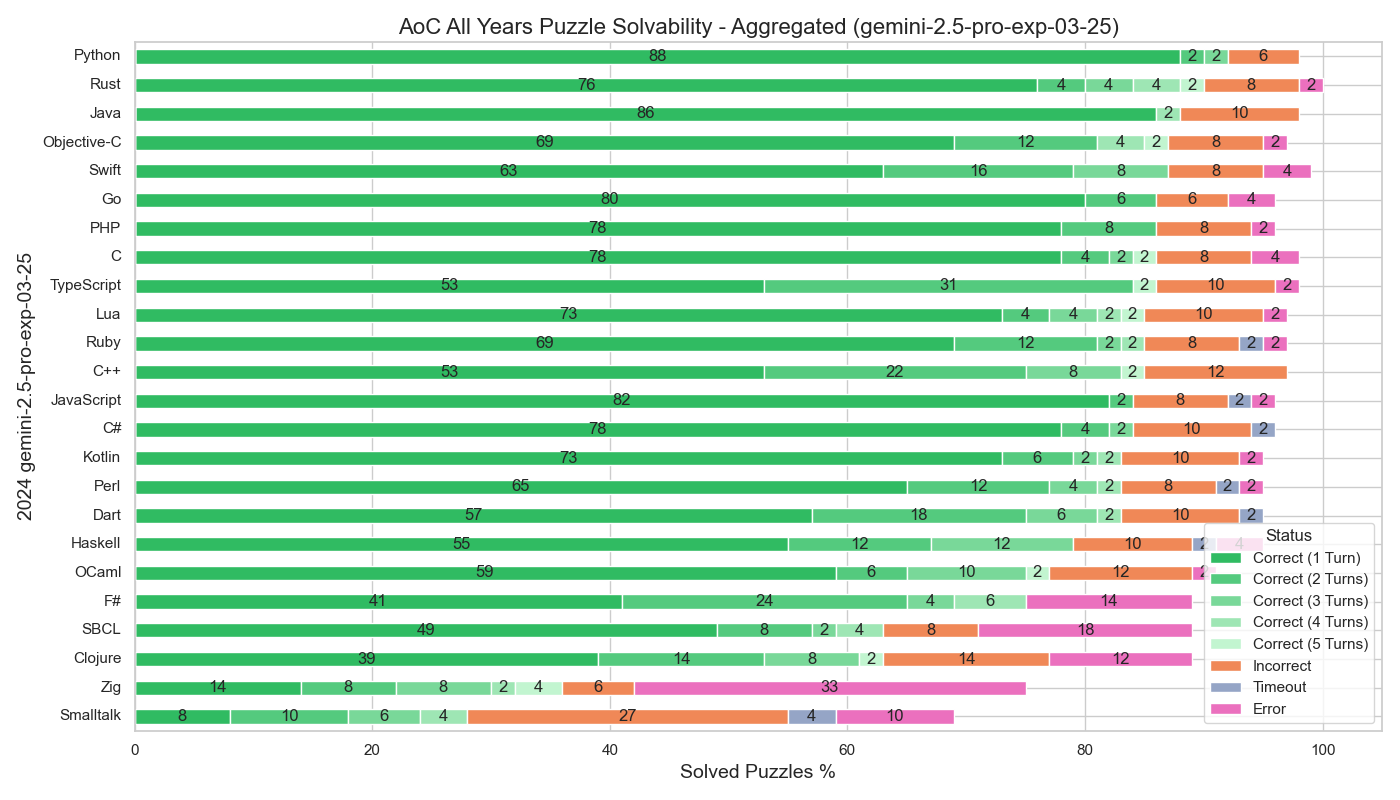

Performance by Language

Gemini 2.5 Pro performed best using Python. This is typical of LLMs, and it’s also reasonable due to Advent of Code being targeted towards Python programmers.

Gemini 2.5 Pro performed nearly as well on many other languages.

The languages it had trouble with were:

| Language | Issues |
|---|---|
| Haskell | Trouble using language extensions. |
| OCaml | Trouble with library semantics. |
| F# | Trouble with language syntax, library semantics. |
| SBCL | Trouble matching parens. |
| Clojure | Trouble matching parens, trouble with libraries. |
| Zig | Trouble using type system, trouble reading error messages. |
| GNU Smalltalk | Didn’t know the library. |

It is interesting to compare Rust to Zig performance. Rust is a difficult-to-use language, but there are a lot of examples on the web of Rust being used to solve coding puzzles.

It seems that Zig’s error reporting syntax, where errors are reported relative to the first line of the function rather than the first line of the source file, makes it hard for Gemini 2.5 to reason about the error messages.

More conversation turns would help

For many languages, we see that at least one puzzle was solved on the 5th turn of the conversation. This suggests that the model could likely solve additional puzzles if given more conversation turns.
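As a rough illustration of why the turn limit matters, here is a hypothetical sketch of a turn-limited evaluation loop. The actual harness is not described here, so the function `solve_with_retries`, the callables `ask_model` and `run_tests`, and the default cap of five turns (inferred from the "5th turn" remark) are all assumptions.

```python
def solve_with_retries(ask_model, run_tests, max_turns=5):
    """Return the turn number on which the puzzle was solved, or None.

    ask_model(feedback) -> program text (hypothetical model call)
    run_tests(program)  -> (ok, output) (hypothetical test runner)
    """
    feedback = None  # the first turn has no error output to show the model
    for turn in range(1, max_turns + 1):
        program = ask_model(feedback)
        ok, output = run_tests(program)
        if ok:
            return turn
        feedback = output  # feed the failure back into the next turn
    return None
```

Under this kind of setup, a puzzle first solved on the last permitted turn hints that other puzzles may have been cut off just before they would have succeeded.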

Possibility of memorization

Gemini 2.5 Pro’s knowledge cutoff is January 2025, which was after the Advent of Code 2024 contest was held. It is possible that information about the puzzles and their solutions was part of the training data for the model.

While it’s not possible to rule memorization out, there is not strong evidence in favor of memorization.

Links

Using Gemini to Solve Advent of Code 2024 Puzzles - a paper measuring how well Gemini 2.0 Flash Thinking solves Advent of Code 2024 puzzles.